LAST UPDATED September 2, 2021

Last year ThreatX announced enhanced bot detection and mitigation capabilities that enable us to identify and stop malicious bots from accessing our customers’ applications. In this post we present a recent case in which we used one of these features, active bot interrogation, to drastically reduce the volume of likely bot requests sent by suspicious source entities. This cut the number of useless or undesirable requests processed by the customer application and mitigated bot threats such as credential stuffing, account takeover (ATO), and fraudulent transactions.

On Malicious Bots

Over 20% of all traffic to web applications comes from malicious bots, per Distil Networks’ 2018 Bad Bot Report. These bots range in sophistication from basic crawlers and scrapers harvesting content and pricing data to bots that emulate real browsers and carry out more advanced attacks such as ATO, credential stuffing, and fraudulent account creation.

Simple bot attacks can often be mitigated with traditional WAF functionality: an aggressive bot scraping content isn’t terribly difficult to distinguish from human users of the web application. A known suspicious or malicious User-Agent can be blocked immediately. A group of IP addresses attempting to log in to your web application repeatedly, or sending multiple malicious requests in a short period of time, can be identified by volume alone. Unfortunately, many of the bots we see today are more advanced.
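The two simple checks described above, a User-Agent blocklist and per-source volume counting, can be sketched as follows. This is a minimal illustration, not ThreatX’s implementation: the `BLOCKED_UA_SUBSTRINGS` entries, the `LoginRateTracker` class, and the attempt limit and window length are all hypothetical choices.

```python
import time
from collections import defaultdict, deque
from typing import Optional

# Hypothetical blocklist of known-bad User-Agent substrings (illustrative only).
BLOCKED_UA_SUBSTRINGS = ("sqlmap", "nikto", "masscan")


def ua_is_blocked(user_agent: str) -> bool:
    """Return True if the User-Agent contains a blocklisted substring."""
    ua = user_agent.lower()
    return any(bad in ua for bad in BLOCKED_UA_SUBSTRINGS)


class LoginRateTracker:
    """Counts login attempts per source IP within a sliding time window."""

    def __init__(self, max_attempts: int, window_seconds: float) -> None:
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        # Maps each IP to the timestamps of its recent login attempts.
        self._attempts: dict[str, deque[float]] = defaultdict(deque)

    def record(self, ip: str, now: Optional[float] = None) -> bool:
        """Record one attempt; return True if the IP is now over the limit."""
        now = time.monotonic() if now is None else now
        window = self._attempts[ip]
        window.append(now)
        # Discard attempts that have aged out of the window.
        while window and now - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_attempts
```

With `LoginRateTracker(max_attempts=5, window_seconds=60)`, an IP’s sixth login attempt within a minute makes `record` return `True`. Because the counter keys on individual IPs, it is exactly the kind of control that bots spreading their traffic across many sources are built to slip under.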

Advanced malicious bots try much harder to look like human web application traffic. They may hide behind a network of tens of thousands of anonymous proxy servers and compromised systems, distributing the sources of their traffic so widely that volume-based detection fails. Advanced bots may also use headless browsers, which easily defeat User-Agent-based detection, along with other techniques that fool WAFs and web applications into treating them as normal human users.

Identifying Suspicious Entities

ThreatX profiles the traffic to our customer sites and identifies the source entities which exhibit suspicious behavior. Sometimes this just means detecting and blocking OWASP Top 10 threats like SQL injection or attempts to exploit known vulnerabilities. An entity performing these attacks is quickly identified as a vulnerability scanner and blocked, but other entities sit in the gray area between blatantly malicious and perfectly benign.

That’s where behavioral profiling comes in: tracking entities that exhibit some of the behaviors and characteristics of malicious traffic, but not enough to make a decision without more information.

Take, for example, an entity sending the following User-Agent string with its requests:

Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/43.0.2357.125 Safari/537.36

This entity represents itself as being a Chrome browser running on Windows 7. There is nothing inherently suspicious about running Windows 7 (though the OS is near end-of-life), and certainly nothing suspicious about using Chrome, the most popular browser worldwide by a significant margin.

The Chrome version number, however, is four years out of date. Why would a legitimate user be running a browser version, 43.0.2357.125, released in June 2015? Chrome updates are still released for Windows 7, and the browser’s auto-update feature ensures that the vast majority of users are running the current version.

Based on this we can consider the outdated Chrome browser to be suspicious, but without any more information we also don’t want to start blocking traffic. ThreatX behavioral profiling allows us to tag the entities with this User-Agent and begin tracking them for further analysis.
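A minimal sketch of this outdated-browser check follows. It assumes the caller supplies the current stable Chrome major version as `current_major`, and it uses a hypothetical tolerance, `max_lag`, of four major releases; the function names and the `outdated-user-agent` tag are illustrative. As described above, it only attaches a tag for behavioral tracking and never blocks on its own. The regular expression also matches other Chromium-based browsers that include a `Chrome/` token in their User-Agent, so a production rule would need more care.

```python
import re
from typing import Optional

# Captures the major version from a "Chrome/<major>.<minor>.<build>.<patch>" token.
CHROME_TOKEN = re.compile(r"Chrome/(\d+)\.\d+\.\d+\.\d+")


def chrome_major_version(user_agent: str) -> Optional[int]:
    """Return the Chrome major version claimed by a User-Agent, or None."""
    match = CHROME_TOKEN.search(user_agent)
    return int(match.group(1)) if match else None


def profile_tags(user_agent: str, current_major: int, max_lag: int = 4) -> set[str]:
    """Return behavioral tags for an entity; this only tags, it never blocks."""
    tags: set[str] = set()
    major = chrome_major_version(user_agent)
    # max_lag is a hypothetical tolerance for how far behind a browser may be.
    if major is not None and current_major - major > max_lag:
        tags.add("outdated-user-agent")
    return tags
```

For the User-Agent shown earlier, `chrome_major_version` returns 43, so with an assumed `current_major` of 75 the entity is tagged `outdated-user-agent` and tracked rather than blocked.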

Isolating and Blocking Bots with Interrogation

Active bot interrogation enables us to challenge these suspicious entities, using multiple techniques, to determine whether they are real human users. These techniques include tests of the entity’s characteristics and capabilities, such as support for redirects and cookie storage; JavaScript-based challenges and browser fingerprinting; and tests of how the entity handles deceptive responses.

Many bots can fake a User-Agent string, but few are configured to follow redirects, accept and correctly return cookies, and fully load and process all page elements. Fewer still can pass the JavaScript-based challenges, bot fingerprinting tests, and deception tests we apply during the interrogation process. When a bot fails interrogation, its traffic is blocked and the ThreatX behavioral profiling engine is immediately updated with the result.
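Of the interrogation techniques listed above, the redirect-and-cookie check is the simplest to illustrate. The sketch below is a generic HMAC-signed-cookie challenge, not ThreatX’s interrogation logic, and it does not cover JavaScript challenges, fingerprinting, or deception tests. The cookie name, secret, five-minute token lifetime, and the `(status, headers)` return shape standing in for a real web framework are all hypothetical; `entity_id` is an opaque identifier such as the client IP. A client that ignores the 302 redirect or drops the cookie never presents a valid token on its next request.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-deployment secret
CHALLENGE_COOKIE = "bot_challenge"    # hypothetical cookie name
TOKEN_TTL_SECONDS = 300               # hypothetical token lifetime


def _sign(entity_id: str, issued_at: int) -> str:
    """HMAC-SHA256 over the entity identifier and issue time."""
    msg = f"{entity_id}:{issued_at}".encode()
    return hmac.new(SECRET_KEY, msg, hashlib.sha256).hexdigest()


def issue_challenge(entity_id: str, original_path: str,
                    now: Optional[int] = None) -> tuple[int, dict[str, str]]:
    """Answer with a 302 back to the same path, setting a signed cookie."""
    issued_at = int(time.time()) if now is None else now
    token = f"{issued_at}.{_sign(entity_id, issued_at)}"
    headers = {
        "Location": original_path,
        "Set-Cookie": f"{CHALLENGE_COOKIE}={token}; Path=/; HttpOnly; Secure",
    }
    return 302, headers


def passes_challenge(entity_id: str, cookies: dict[str, str],
                     now: Optional[int] = None) -> bool:
    """True if the client followed the redirect and returned a fresh, valid cookie."""
    token = cookies.get(CHALLENGE_COOKIE)
    if token is None:
        return False  # cookie never stored or never sent back
    issued_str, _, signature = token.partition(".")
    if not issued_str.isdigit():
        return False  # malformed token
    issued_at = int(issued_str)
    current = int(time.time()) if now is None else now
    if current - issued_at > TOKEN_TTL_SECONDS:
        return False  # token expired
    # Constant-time comparison so the signature cannot be guessed byte by byte.
    return hmac.compare_digest(signature, _sign(entity_id, issued_at))
```

Signing the cookie, rather than just checking for its presence, prevents a bot from simply inventing a cookie value, and binding the signature to `entity_id` stops one solved challenge from being replayed by other sources.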

In a recent case we were able to block over 95% of potential bot traffic using advanced interrogation techniques.

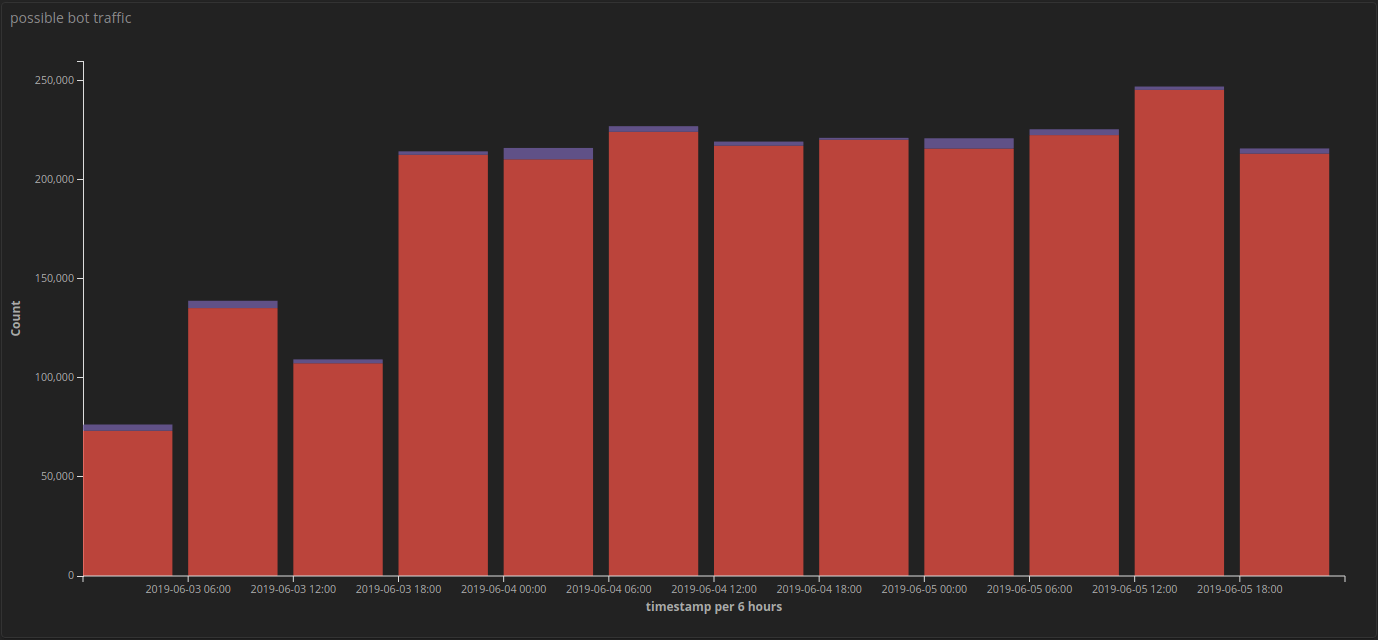

We first classified a portion of the requests to our customer’s application as suspicious using some of the identification techniques discussed in the last section. These included characteristics of the requesting entity like an unusual or outdated User-Agent as well as behaviors which distinguished the entities from normal traffic, like an abnormally high number of login attempts or crawler-like browsing behavior. Over a few days we tracked millions of requests from tens of thousands of unique sources which exhibited this suspicious behavior.
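Combining these signals, with a threshold deciding which entities are sent to interrogation, might look like the weighted score below. The tag names, weights, `EntityProfile` class, and `INTERROGATION_THRESHOLD` value are hypothetical and exist only to show how an entity with a single weak signal stays below the threshold while one that combines several signals is interrogated.

```python
from dataclasses import dataclass

# Hypothetical weights for behavioral tags, chosen only for illustration.
WEIGHTS = {
    "outdated-user-agent": 2,
    "high-login-rate": 3,
    "crawler-like-browsing": 2,
}
INTERROGATION_THRESHOLD = 4  # hypothetical cut-off


@dataclass
class EntityProfile:
    entity_id: str
    tags: set[str]

    def suspicion_score(self) -> int:
        """Sum the weights of known tags; unknown tags contribute nothing."""
        return sum(WEIGHTS.get(tag, 0) for tag in self.tags)

    def should_interrogate(self) -> bool:
        return self.suspicion_score() >= INTERROGATION_THRESHOLD
```

An entity tagged only `outdated-user-agent` scores 2 and is merely tracked; one that also shows `high-login-rate` scores 5 and is challenged.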

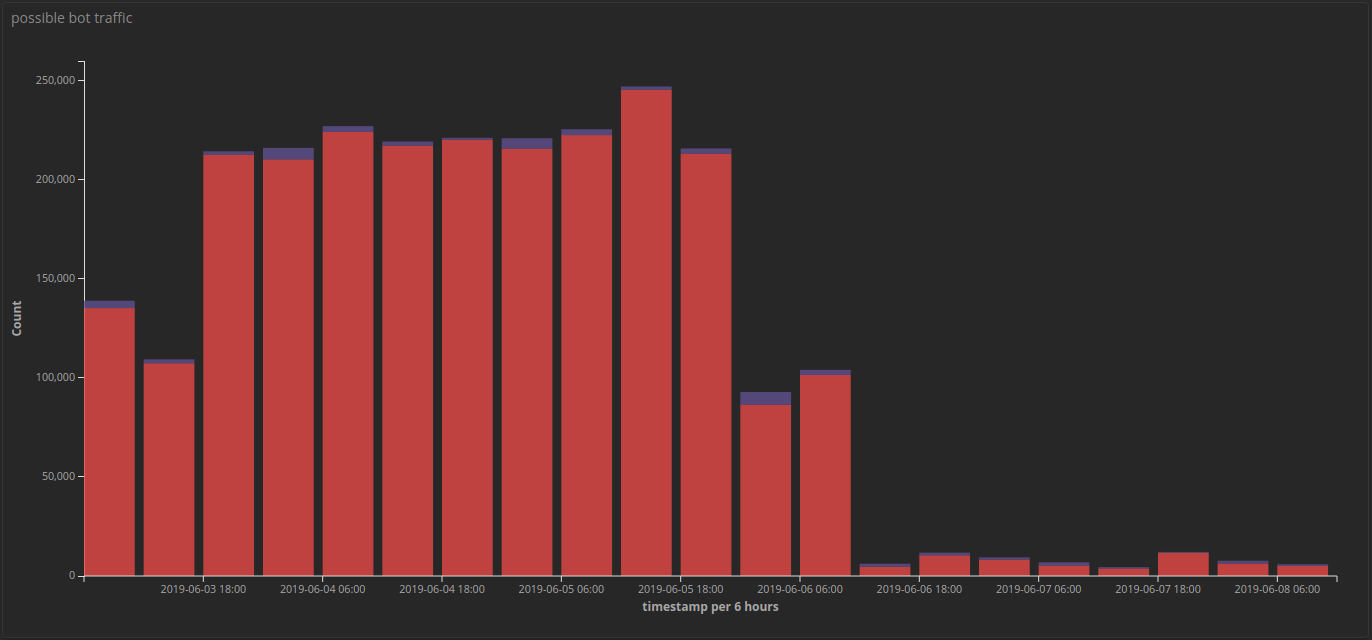

Suspicious requests after adding interrogation, 2019-06-03 – 2019-06-08

Within a few hours the total number of “suspicious” requests to the customer site had dropped by 95%. The remaining traffic either did not meet our initial threshold for interrogation or passed interrogation, which confirmed it as coming from legitimate users who could continue browsing the site as normal, without any action required on their part.

Bot Detection with ThreatX

Malicious bots are a serious and growing threat to web applications. The number of advanced bots, which can defeat many security controls, is increasing as these become easier to create and simple bots become less effective. These advanced bots make a strong effort to evade detection while carrying out their attacks, and some classes of their attacks like ATO and credential stuffing may never be identified without advanced techniques.

At ThreatX we combine multiple advanced bot detection techniques, such as active interrogation, tar-pitting, and behavioral profiling of real traffic to your web application, to detect and stop malicious bots, simple and advanced, without impacting the experience of your human users. Learn more about these application security methods, including their effectiveness against common web application attacks, in a recent webinar, Lessons from the Front Lines of AppSec.